In 2022, newly graduated from UNH with a master’s in health data science, I was brought on board at the UCSF Memory & Aging Center. There, I had the honor of working with Dr. Jet Vonk and her colleagues in the ALBA Lab.

Dr. Vonk is an assistant professor at UCSF. She holds a doctorate in Speech-Language-Hearing Sciences from Columbia University and a second doctorate in Epidemiology from Utrecht University in the Netherlands. Her research centers on developing language-based cognitive tools for the early diagnosis and longitudinal monitoring of Alzheimer’s disease and frontotemporal dementia variants.

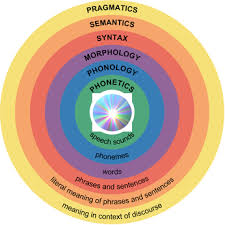

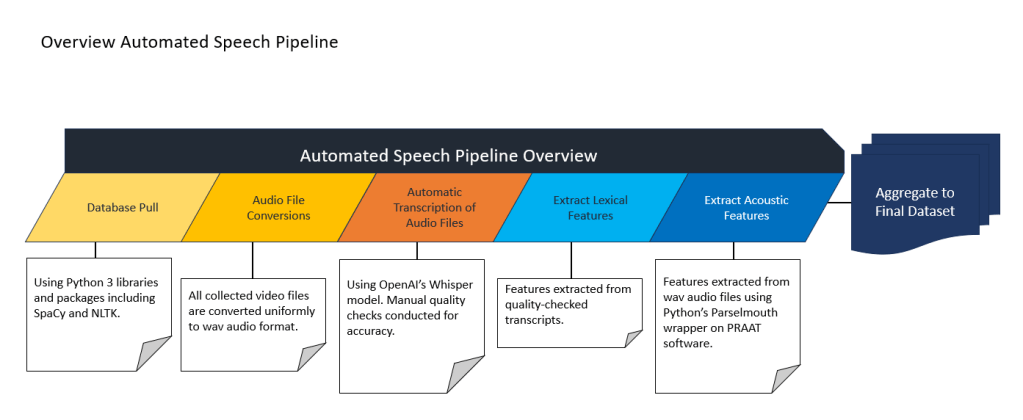

Together, we spent the next two years designing and building an automatic speech pipeline in Python. As the main programmer on the project, I coded a pipeline that takes digital audio recordings of cognitive assessments, transcribes them automatically, and analyzes the results with a range of natural language processing and computational tools. In this way, we were able to extract both linguistic and acoustic measures from the audio and its matching transcripts. The resulting dataset provided a wealth of information about each participant’s speech and language use. Informative features included pause rate, noun-to-verb ratio, and average syllable pause duration; today the pipeline can extract more than 100 features. Such measures allow researchers to distinguish between dementia variants and to track the progression of a patient’s disease through changes in their speech metrics from one assessment to the next. I am very proud that our work resulted in a publication in Neurology, and the pipeline continues to be used in new projects by Dr. Vonk and her colleagues.
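
To give a rough sense of what two of these features involve, here is a minimal sketch in plain Python. It is an illustration only, not the pipeline's actual code: the `Word` record, the 0.25-second pause threshold, the definition of pause rate as pauses per second of speech, and the sample sentence are all assumptions made for this example. In practice the part-of-speech tags and word timestamps would come from a tagger and a speech recognizer or aligner.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """Hypothetical record for one transcribed word with its timing."""
    text: str
    pos: str      # coarse part-of-speech tag, e.g. "NOUN" or "VERB"
    start: float  # onset time in seconds
    end: float    # offset time in seconds


def noun_to_verb_ratio(words):
    """Number of nouns divided by number of verbs (NaN if there are no verbs)."""
    nouns = sum(1 for w in words if w.pos == "NOUN")
    verbs = sum(1 for w in words if w.pos == "VERB")
    return nouns / verbs if verbs else float("nan")


def pause_rate(words, min_pause=0.25):
    """Pauses per second, where a pause is a silent gap between
    consecutive words of at least `min_pause` seconds (assumed threshold)."""
    if len(words) < 2:
        return 0.0
    pauses = sum(
        1 for prev, nxt in zip(words, words[1:])
        if nxt.start - prev.end >= min_pause
    )
    duration = words[-1].end - words[0].start
    return pauses / duration if duration > 0 else 0.0


# Made-up sample: "the dog chased the ball" with two half-second gaps.
sample = [
    Word("the", "DET", 0.0, 0.2),
    Word("dog", "NOUN", 0.2, 0.5),
    Word("chased", "VERB", 1.0, 1.4),
    Word("the", "DET", 1.4, 1.5),
    Word("ball", "NOUN", 2.0, 2.4),
]

print(noun_to_verb_ratio(sample))  # two nouns, one verb
print(pause_rate(sample))          # two pauses over 2.4 seconds
```

Computing the same features for each assessment makes it possible to compare a participant's values over time, which is the kind of longitudinal tracking described above.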